Engineering with AI at Unit 410

By Drew Rothstein, President

At Unit 410, we are early adopters of tools that help us achieve our goals and mission while enabling us to keep our team small and focused. We have used various AI chat agents over the past few years along with integrated IDE agents. We invested in running our own multi-model tooling quite early, and the team has really enjoyed the ability to select from 10+ models for their queries.


HuggingFace ChatUI Deployed Internally

Development Workflow

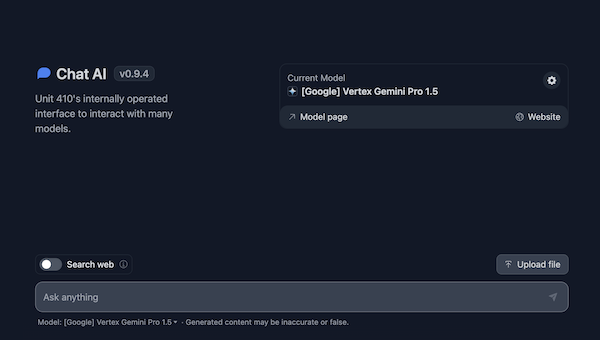

Our development workflow has evolved from various editors with GitHub/Microsoft Copilot integration to primarily VSCode and Cursor with multi-model capabilities. It is important that we have a standard path for engineers but do not unreasonably restrict tools. We encourage the team to regularly push the boundaries with new tools by proposing ideas through weekly demo days and sharing RFCs.

Cursor IDE’s Multi-model Built-in Chat

Code Review

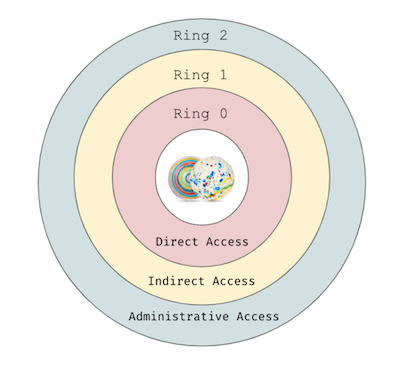

Code review has become an area of focus for AI tooling, with new companies being funded to work on it. Code review at Unit 410 depends on the codebase involved and the service’s level of access.

Access Levels

It is still quite early in the development of Code Review tools that utilize LLMs and provide a useful interface for review. We have evaluated several tools and found most need more evolution before their interaction is functional enough to be useful. It is not that LLMs can’t build the required context or useful responses - it is that the UX is very hard to do well. We also experimented with building our own but learned quite quickly that the cost to build and maintain it would not hold up in a build vs. buy analysis.

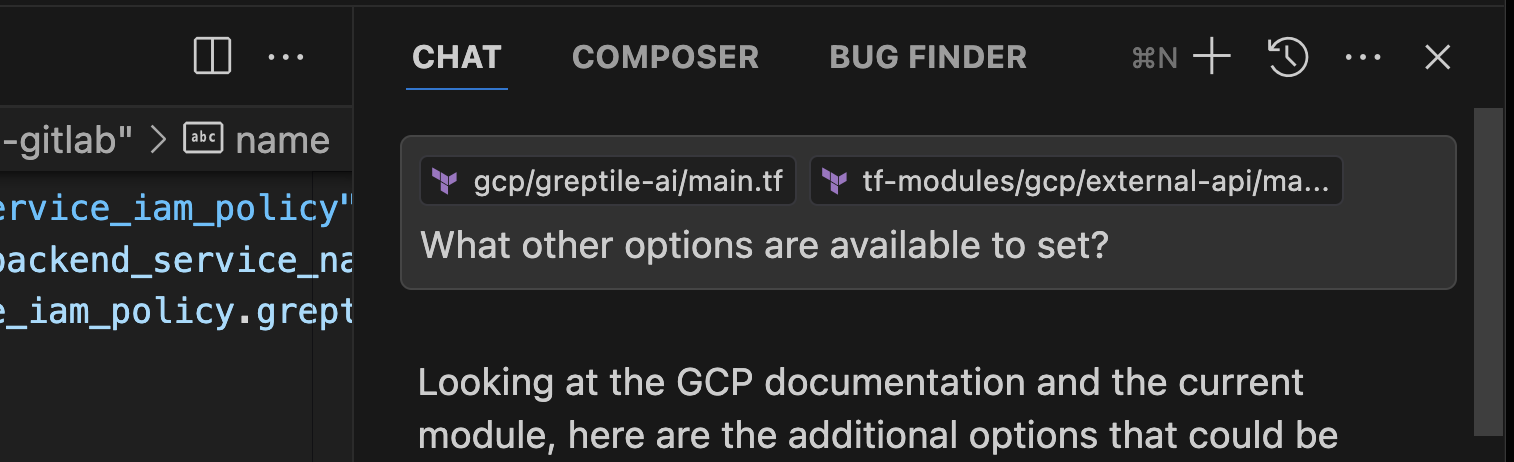

Greptile

We recently reviewed Greptile, which focuses on solving the UX problem - the biggest challenge in a functional code review tool. They are also a nimble team, and our ability to shape what they are building and solving was appealing to us.

We chose to install it in our environment instead of utilizing their SaaS product. We commonly make this choice as part of our initial risk review. It increases the cost of initial deployment and long-term maintenance but in turn gives us a lot more comfort in the security and perimeter of the tooling.

Deployment

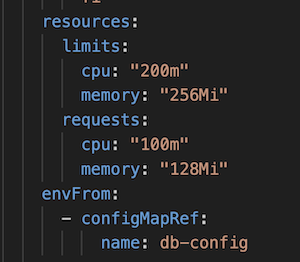

Like most startups, they chose Kubernetes and Helm charts as their initial starting point for deployment of their multi-service architecture. While some may disagree with this as a starting point, it isn’t too difficult to break down into components that are easily deployed into our environment. In reviewing their service architecture and resource limits, it was clear this could be a single docker-compose file on a pretty small VM (a couple of CPUs, a couple of GB of RAM) with no issues.

Example Resource Definition in Helm Charts

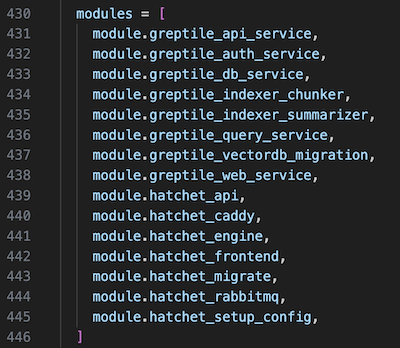

We chose to deploy their services in docker on a single VM, except for the integrations which we placed on a separate very small VM.
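The sizing check described above, adding up the resource limits from the Helm charts and comparing them to a small VM, can be sketched roughly as follows. This is a minimal illustration and not our actual tooling: the service names and limit values in `EXAMPLE_LIMITS` are hypothetical, and only the common Kubernetes quantity suffixes are handled.

```python
from decimal import Decimal

# Binary and decimal suffixes commonly used in Kubernetes memory quantities.
_MEMORY_UNITS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
    "k": 10**3, "M": 10**6, "G": 10**9,
}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity such as '500m' or '2' into cores."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' or '1Gi' into bytes."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # no suffix means plain bytes


def total_limits(services: dict) -> tuple:
    """Sum CPU (cores) and memory (bytes) limits across all services."""
    cpu = sum((parse_cpu(s["cpu"]) for s in services.values()), Decimal(0))
    memory = sum(parse_memory(s["memory"]) for s in services.values())
    return cpu, memory


def fits(services: dict, vm_cpus: int, vm_memory_gib: int) -> bool:
    """Return True if the summed limits fit within the given VM size."""
    cpu, memory = total_limits(services)
    return cpu <= vm_cpus and memory <= vm_memory_gib * 2**30


# Hypothetical services and limits, for illustration only.
EXAMPLE_LIMITS = {
    "web": {"cpu": "500m", "memory": "512Mi"},
    "api": {"cpu": "500m", "memory": "1Gi"},
    "worker": {"cpu": "1", "memory": "1Gi"},
}

if __name__ == "__main__":
    cpu, memory = total_limits(EXAMPLE_LIMITS)
    print(f"total: {cpu} cores, {memory / 2**30:.1f} GiB")
    print("fits on 2 CPU / 4 GiB VM:", fits(EXAMPLE_LIMITS, 2, 4))
```

Summing limits rather than requests gives a conservative upper bound, which is the safer number to use when everything shares one VM.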

Service Modules

The separation of the monolith (yes, I know it is actually “microservices”) from the integrations was intentional in three ways:

- It makes the traffic paths / patterns much easier to understand.

- It enables setting up load balancers with very clear mappings vs. co-mingling user web traffic with API service traffic.

- It keeps the architecture and terraform a lot cleaner and easier to understand.

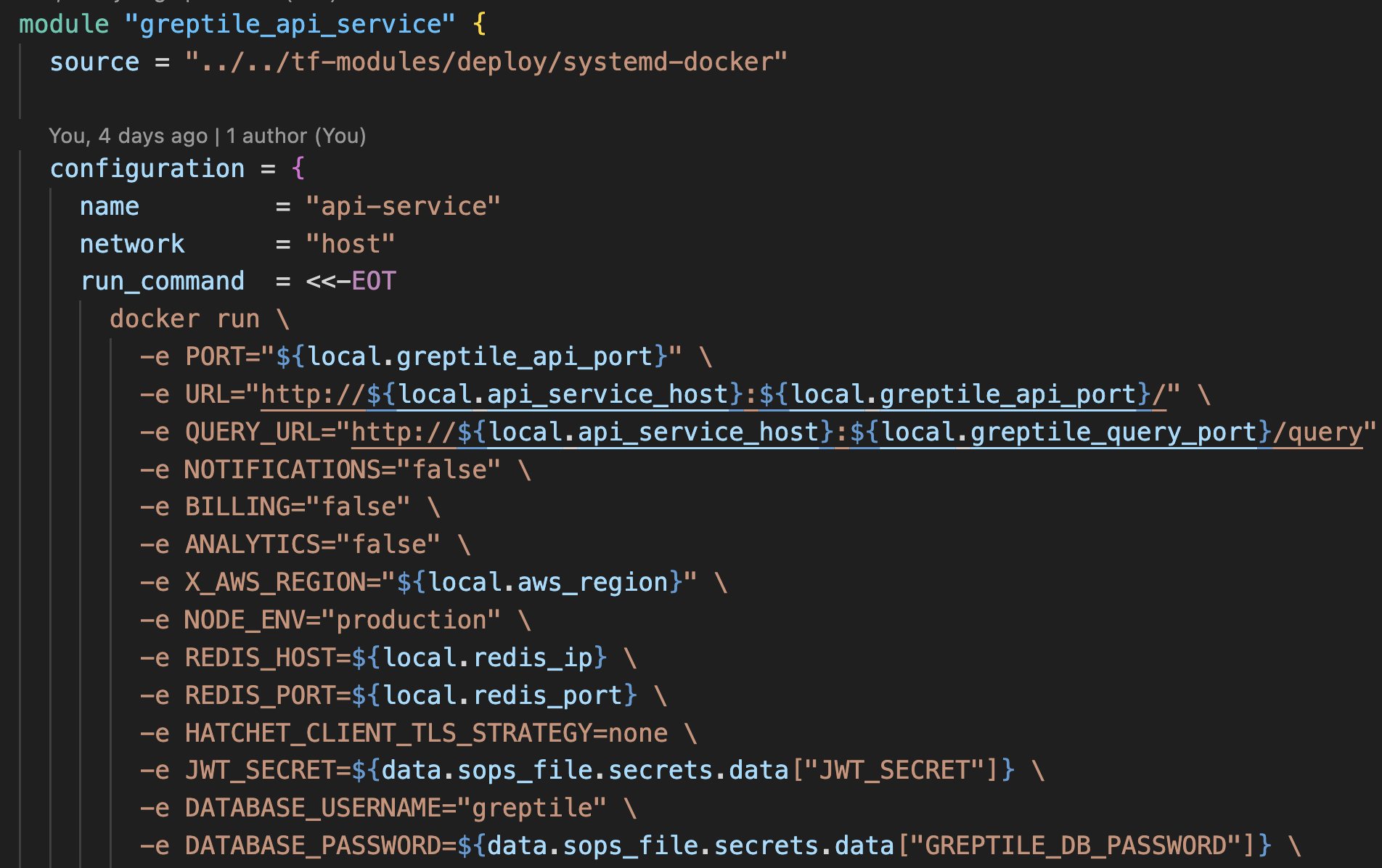

We codified their services with terraform quite easily from their Helm charts. The biggest challenge was figuring out what each Environment Variable actually meant and how it was used. Over time, I expect they will develop documentation to assist with deployments as they learn / grow – building software for others to deploy in their environments with their idioms is not a small feat.

Each service gets its own module using our shared docker configuration:

Per-service Configuration

Configuration

For AuthN, we chose to use Google IAP as it is trivial to configure in terraform and prevents us from having to run and maintain an auth-specific service. They bundle boxyhq/jackson for folks if that is a better path. We chose a different path but may re-evaluate over time.

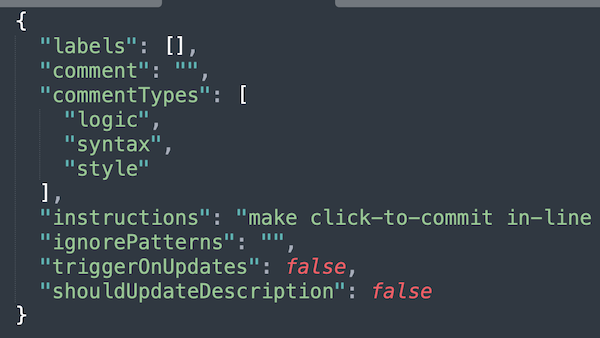

After adding a few DNS entries, our automated certificate generation took over, and we were up and ready to configure the tooling itself. We saved some of our configuration from testing their SaaS product on a set of public repositories.

Greptile Settings JSON

One feature we quite like is that settings (including instructions) are exportable. This enables us to invest in and test changes to an ever-growing set of instructions without worrying about how they change over time or about losing them.
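Because the export is plain JSON, tracking changes between two exports is straightforward. Below is a minimal sketch of comparing two snapshots. The keys used here (`instructions`, `review.comments`, `review.summary`) are hypothetical placeholders and are not taken from the actual Greptile settings schema.

```python
import json


def flatten(value, prefix=""):
    """Flatten nested dicts into {'a.b.c': leaf} pairs for easy comparison."""
    if isinstance(value, dict):
        flat = {}
        for key, child in value.items():
            flat.update(flatten(child, f"{prefix}{key}."))
        return flat
    return {prefix.rstrip("."): value}


def diff_settings(old_json: str, new_json: str) -> dict:
    """Report which flattened keys were added, removed, or changed."""
    old = flatten(json.loads(old_json))
    new = flatten(json.loads(new_json))
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


# Hypothetical exported snapshots, for illustration only.
OLD = json.dumps({
    "instructions": "Be concise.",
    "review": {"comments": True},
})
NEW = json.dumps({
    "instructions": "Be concise. Flag missing tests.",
    "review": {"comments": True, "summary": True},
})

if __name__ == "__main__":
    print(json.dumps(diff_settings(OLD, NEW), indent=2))
```

Checking each export into version control alongside a diff like this keeps a history of how the instructions evolved.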

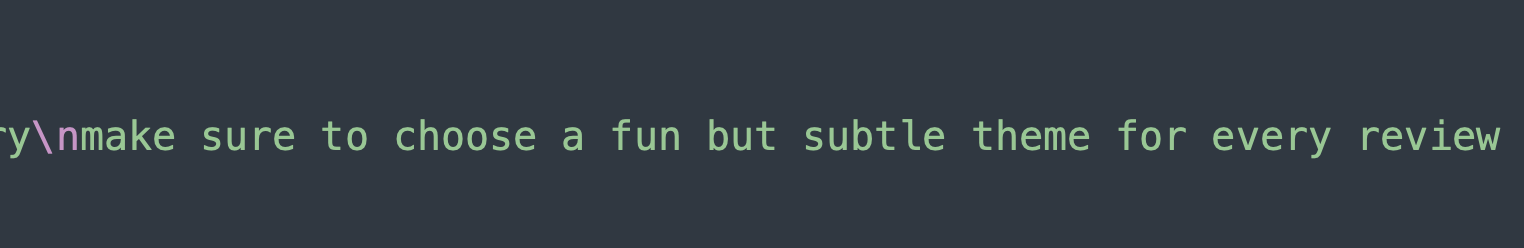

This also enables us to inject some fun into our process:

LLM Instructions

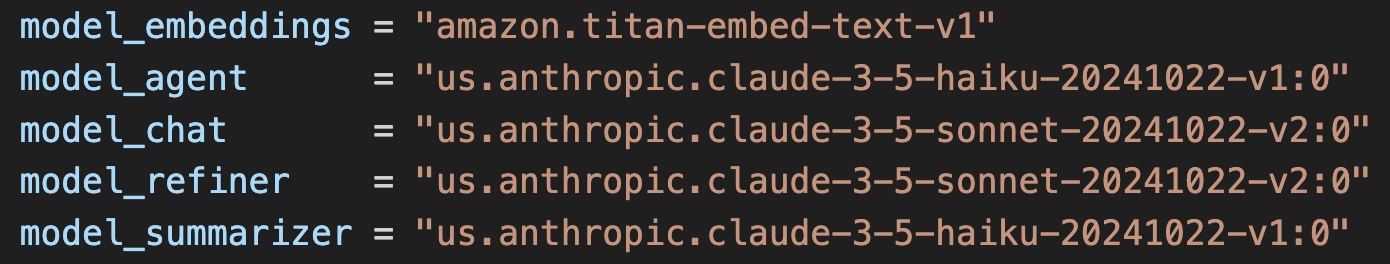

Model Configuration

We look forward to experimenting with different models and providers. The flexibility to select different model providers (and thus, models) that are OpenAI-compatible is table stakes given the speed at which models are evolving. This is trivial with our Greptile tooling.
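To illustrate why OpenAI compatibility makes providers interchangeable, here is a minimal sketch that builds the same chat completions request for any provider, with only the base URL, model name, and key changing. It shows the general request shape and not how Greptile is configured internally. The `Provider` class, the example URL, and the model name are hypothetical, and the request is only constructed, never sent.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    """Everything that changes when swapping OpenAI-compatible providers."""
    base_url: str  # e.g. "https://llm.example.internal/v1" (placeholder)
    model: str     # provider-specific model name (placeholder)
    api_key: str


def build_chat_request(provider: Provider, system_prompt: str,
                       user_prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a provider."""
    body = {
        "model": provider.model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        url=provider.base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {provider.api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical provider values, for illustration only.
    provider = Provider("https://llm.example.internal/v1", "example-model", "not-a-real-key")
    request = build_chat_request(provider, "You are a code reviewer.", "Review this diff.")
    print(request.get_method(), request.full_url)
```

Swapping providers then amounts to constructing a different `Provider` value. The request body and the calling code stay the same.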

Model Configuration

Result

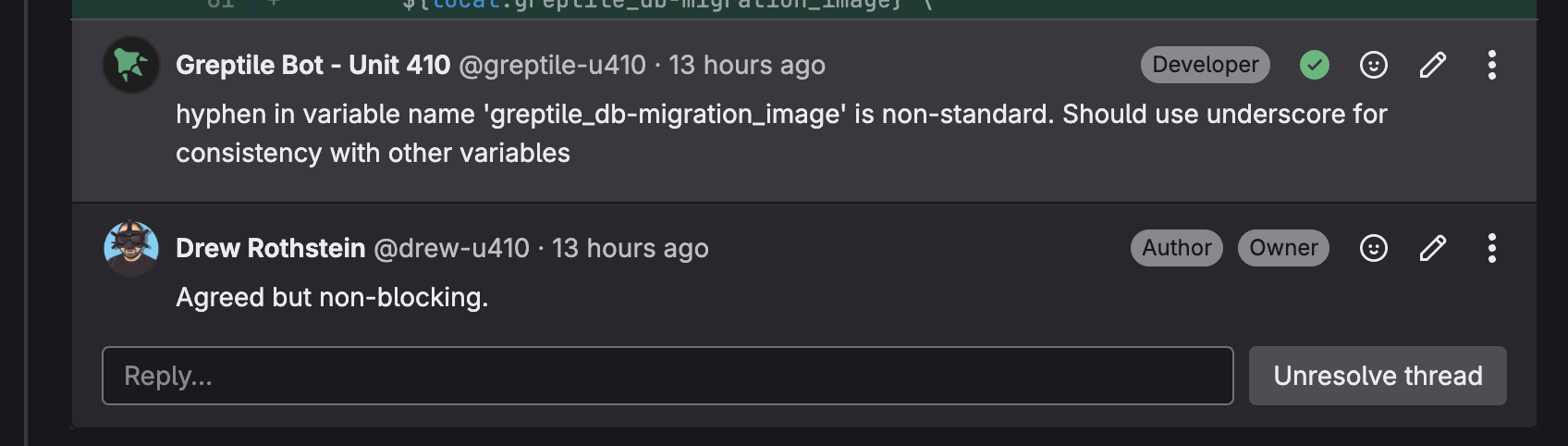

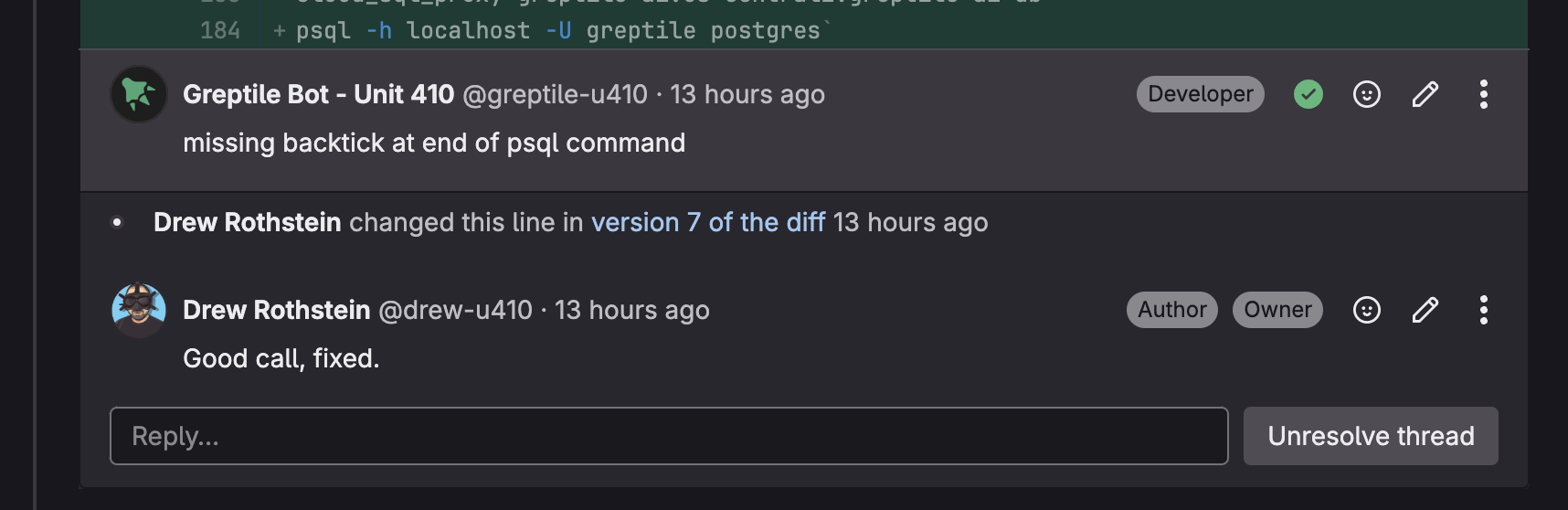

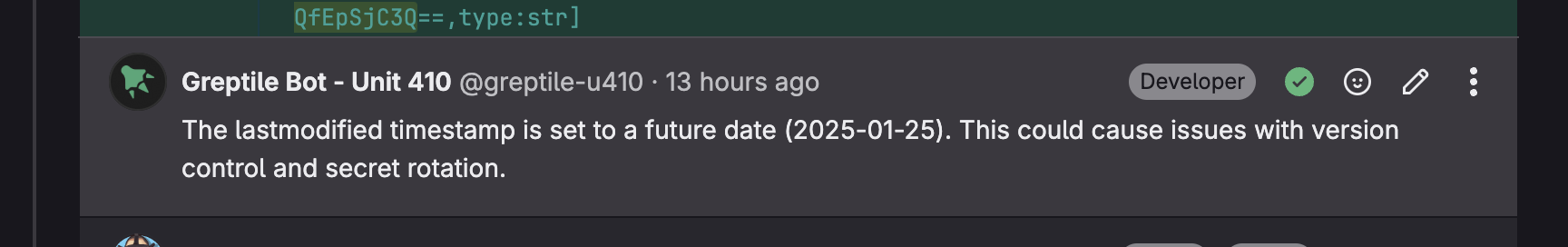

It is still early, but the team is adjusting to the robot reviewer in code reviews. It isn’t always “right” but it is reasonable. When it isn’t “right”, there are usually explainable and understood reasons why - some of these can be tuned over time through better instructions. In some of these cases, which human reviewers may or may not point out, it provides a reasonable pause for consideration, which can be helpful.

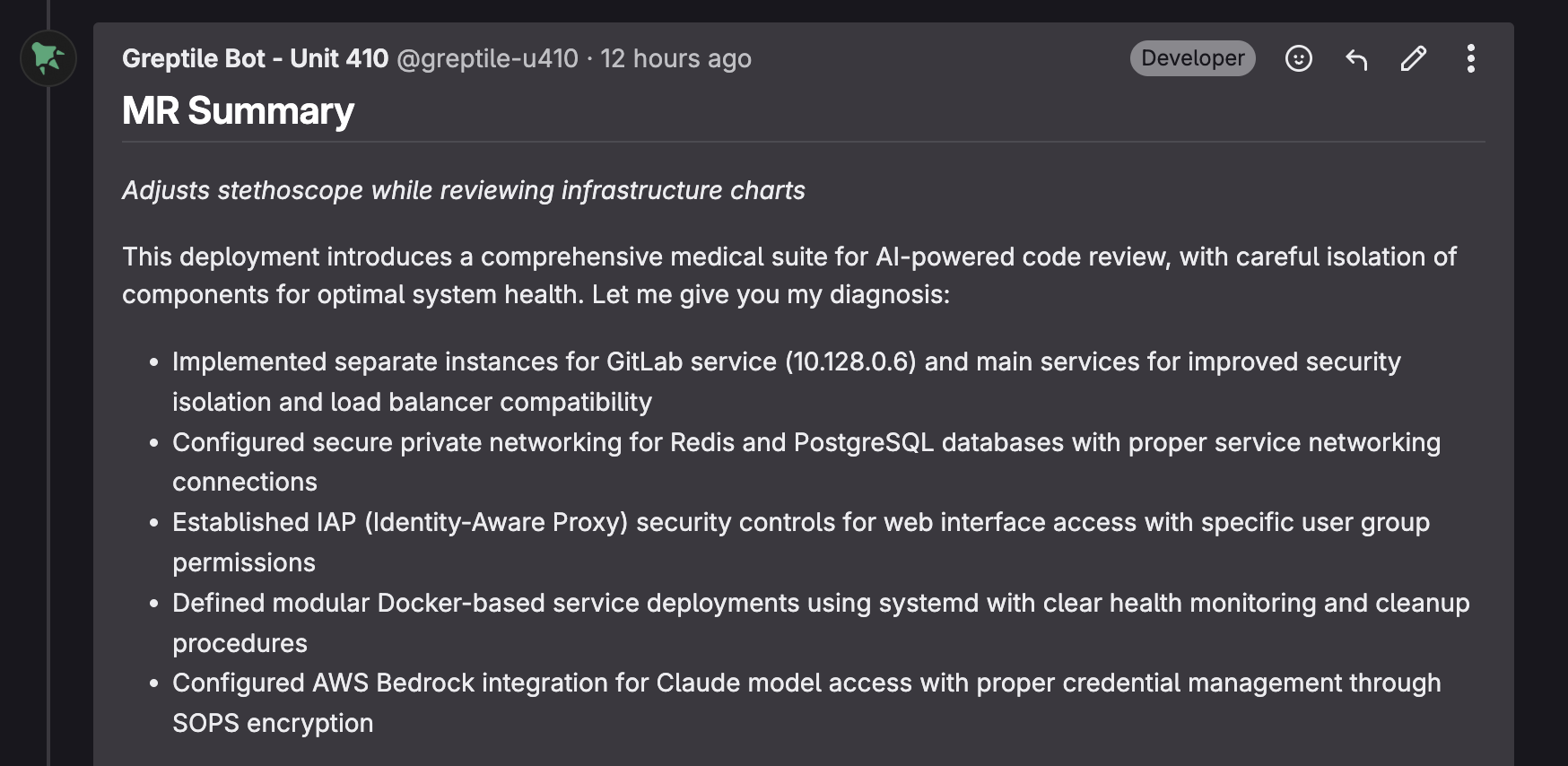

Example Summary from Greptile implementation itself

Reasonable

Extra character that was hard to see - would a human have commented on this to fix?

It was not “right” in this context but then again…what is time?

Conclusion

We are excited to see how code review and other engineering processes continue to evolve as AI tooling improves, especially around UX. The more integrated these tools are into our existing workflows, processes, and habits, the more likely we are to try and adopt them.

If this type of work is interesting to you, we are actively hiring Infrastructure Engineers.