Reducing Git Repository Size Without Deleting History

3AM always comes too soon

By Grant Slape, Technical Lead + Manager

The light from your work phone illuminates your face. A piercing sound rouses you from your blissful slumber. It’s happened: your worst nightmare, your primeval fear.

Your monorepo is now too large to be bundled properly. Dependency managers everywhere are failing.

If you encounter such a shock to your slumber, there are some unique wrinkles that might prevent you from taking the more common path of “just truncate the history.” Notably, these may include:

- Large objects that were committed intentionally and are necessary for the repository to function correctly.

- These objects are revised semi-frequently, and every revision adds to the history size.

- History is important and cannot simply be discarded, for a variety of reasons.

This is something you’ll likely need to fix, and quickly.

Plan of Attack

The first and arguably most important task is to get buy-in from your entire team. Because Git is decentralized, anyone with an old local copy of the repository could undo these changes simply by pushing that copy back to the remote. Thus, once this migration is complete, every team member should delete their local copy and re-clone.

Second, you’ll need to identify the right tools for the job.

For example, if you have the requirements listed above, you may consider using Git LFS to store your large objects.

Because history is often important, you may not want to use git-filter-repo or BFG Repo-Cleaner.

While both of these tools are excellent for their intended use cases, they are not a good fit here, because they work by rewriting every affected commit in the existing history.

Instead, consider backing up your existing Git history to an archival repository and simply truncating the history in the primary repository.

You can also rehearse this process on a test repository first, before applying it in production.

This process can be accomplished entirely with git and other common command-line tools, which is a huge win!

The plan of attack then appears to be:

- Identify all large objects committed to the primary repository

- Migrate all large objects to Git LFS

- Back up Git history to the archival repository

- Truncate Git history in the primary repository

- Migrate any open merge requests in the primary repository

- Clean Git objects in the primary repository

- Re-clone all local copies of the primary repository

Great! Let’s get started.

Identify Large Objects

It’s easy to understand which files are the largest in a given directory:

```bash
du -sh *
```

However, this doesn’t give us the full picture: what we actually care about is what takes up space in the repository’s history, including old revisions of files that may no longer exist in the working tree. After some research, we can get a pretty good picture:

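```bash
# needed for gnumfmt if you are on macOS
brew install coreutils

# List every object in history, keep only blobs, sort them by size (largest last),
# shorten each hash to 12 characters (cut assumes 40-character SHA-1 names),
# and print sizes in human-readable units
git rev-list --objects --all |
  git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' |
  sed -n 's/^blob //p' |
  sort --numeric-sort --key=2 |
  cut -c 1-12,41- |
  $(command -v gnumfmt || echo numfmt) --field=2 --to=iec-i --suffix=B --padding=7 --round=nearest
```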
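If the list is long, it can also help to see which top-level directories account for most of the history. The helper below is a minimal sketch, not part of the original workflow: `sum_blob_sizes_by_dir` is a hypothetical name, and it assumes the same `git rev-list`/`git cat-file` output format as the pipeline above. It adds up each unique blob's uncompressed size once, so it overstates what the delta-compressed pack actually occupies on disk.

```bash
#!/usr/bin/env bash
# Sum the uncompressed size of every blob in history, grouped by top-level
# directory. Reads `git cat-file --batch-check` lines of the form
# "blob <sha> <size> <path>" on stdin; non-blob lines are ignored.
sum_blob_sizes_by_dir() {
  awk '$1 == "blob" {
         path = $4
         for (i = 5; i <= NF; i++) path = path " " $i   # rejoin paths containing spaces
         n = split(path, parts, "/")
         dir = (n > 1) ? parts[1] "/" : "(root)"
         total[dir] += $3
       }
       END { for (d in total) print total[d], d }' |
    sort -rn
}

# Usage inside a repository:
#   git rev-list --objects --all |
#     git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' |
#     sum_blob_sizes_by_dir
```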

We’ve got our list of files; now it is time to migrate them to Git LFS.

Migrate Large Objects

Git LFS is easy to use, although it has some idiosyncrasies to get used to. First, everyone on your team will need to install Git LFS:

```bash
brew install git-lfs
git lfs install
```

`git lfs install` normally writes this filter configuration to your ~/.gitconfig for you; if it is missing, you can add it by hand:

```ini
[filter "lfs"]
	clean = git-lfs clean -- %f
	smudge = git-lfs smudge -- %f
	process = git-lfs filter-process
	required = true
```

We are now ready to migrate our large objects to Git LFS! One quirk to mention before we get started:

- The `git-lfs` program you invoke to track files operates on file names.

- The `.gitattributes` file that is created to track LFS files operates on `.gitignore`-style patterns.
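One way to see this difference in practice is `git check-attr`, which reports whether a concrete file name matches a pattern in `.gitattributes`. A minimal sketch, assuming a `build/**` tracking pattern like the one used in this example; `is_lfs_tracked` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Succeed if Git would route the given file name through the LFS filter,
# according to the .gitattributes patterns in the current repository.
is_lfs_tracked() {
  local path="$1"
  # check-attr prints "<path>: filter: lfs" when a pattern matches,
  # and "<path>: filter: unspecified" when none does.
  [[ "$(git check-attr filter -- "$path")" == "$path: filter: lfs" ]]
}

# Usage:
#   is_lfs_tracked build/textures/atlas.png && echo "tracked by LFS"
```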

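First, let’s import the large objects to Git LFS. This example assumes all large objects are located in the build directory. With `--no-rewrite`, Git LFS only imports files that already match a tracking pattern in `.gitattributes`, so we add that pattern and commit it first:

```bash
git lfs track "build/**" && git add .gitattributes && git commit -m "track build directory with git-lfs"
git lfs migrate import --no-rewrite -m "migrate large objects to git-lfs" $(git ls-files build)
```

Breaking down these commands:

- `git lfs track "build/**"` writes a pattern (not a list of files) to `.gitattributes`

- `git lfs migrate import` will import the specified files to Git LFS

- `--no-rewrite` specifies that history should not be rewritten and that a new commit should be created

- `$(git ls-files build)` is expanded by our shell to the full list of file names under build (remember our note on quirks before?); this assumes no paths contain whitespace

- `-m "migrate large objects to git-lfs"` will be our commit message

Together, these commands create a `.gitattributes` file and migrate the large objects to Git LFS. We’ll use the migration commit as our starting point for the truncation process.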
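Before moving on, it is worth confirming that the migration commit really contains LFS pointers rather than the original binaries. Pointer files are small text files whose first line is `version https://git-lfs.github.com/spec/v1`. The following is a minimal sketch with hypothetical helper names (`is_lfs_pointer`, `report_non_pointers`), assuming the large objects live under build; when Git LFS is installed, `git lfs ls-files` is a more direct way to list the files it manages.

```bash
#!/usr/bin/env bash
# Succeed if the blob at <rev>:<path> is a Git LFS pointer file.
is_lfs_pointer() {
  local rev="$1" path="$2"
  local first_line
  first_line=$(git cat-file -p "$rev:$path" 2>/dev/null | head -n 1)
  [[ "$first_line" == "version https://git-lfs.github.com/spec/v1" ]]
}

# Print every file under <dir> in <rev> that is still stored as a raw blob.
report_non_pointers() {
  local rev="${1:-HEAD}" dir="${2:-build}"
  git ls-tree -r --name-only "$rev" -- "$dir" | while IFS= read -r path; do
    is_lfs_pointer "$rev" "$path" || echo "not a pointer: $path"
  done
}

# Usage:
#   report_non_pointers HEAD build   # no output means every file is a pointer
```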

Backup Git History

Now let’s push the current history to the archival repository:

- Merge your work from the migration step into your base branch. We’ll assume it is called `main`.

- Create the archival repository in your cloud Git provider of choice. We’ll use GitLab here and assume the repository is called `my-repo-archive`.

- Push the history, including tags:

```bash
git remote add archive git@gitlab.com:my-org/my-repo-archive.git
git checkout main
git push archive main
git push archive --tags
```
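Before touching the primary repository, it is worth confirming that the archive really received everything. A minimal sketch, assuming the `archive` remote and `main` branch names from above; `backup_matches` and `missing_tag_count` are hypothetical helpers built on `git ls-remote`:

```bash
#!/usr/bin/env bash
# Succeed if <remote> has <branch> at exactly the same commit as the local branch.
backup_matches() {
  local remote="$1" branch="$2"
  local local_sha remote_sha
  local_sha=$(git rev-parse --verify "refs/heads/$branch") || return 1
  remote_sha=$(git ls-remote "$remote" "refs/heads/$branch" | cut -f1)
  [[ -n "$remote_sha" && "$local_sha" == "$remote_sha" ]]
}

# Print how many local tags are missing from <remote> (0 means all were pushed).
missing_tag_count() {
  local remote="$1"
  comm -23 <(git tag | sort) \
           <(git ls-remote --tags --refs "$remote" | sed 's#.*refs/tags/##' | sort) |
    wc -l | tr -d ' '
}

# Usage:
#   backup_matches archive main && [ "$(missing_tag_count archive)" = 0 ] && echo "backup looks complete"
```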

Truncate Git History

Now that our history is backed up, we can start truncating history on the primary repository. A quick note:

All actions from this point will rewrite your git history! Carefully review each command you’ll run and ensure you understand what is going on. You can generally recover from the backup you created; however, this would be time-consuming.

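```bash
#!/usr/bin/env bash
set -euo pipefail

# Replace with your known good commit from "Migrate Large Objects"
commit_hash=deadbeef

git fetch origin

# Create a parentless branch whose files match $commit_hash exactly
git checkout --orphan temp "$commit_hash"
git commit --no-verify -m "Truncate history (full history lives in my-repo-archive)"

# Replay any commits made on main after $commit_hash onto the new root;
# this rewrites the local main branch and leaves it checked out
git rebase --onto temp "$commit_hash" main

# Verify there are no differences (exits non-zero, stopping the script, if any)
git diff --exit-code HEAD origin/main

# Record the new commit hash
git rev-parse HEAD

# Force-push the rewritten main; GitLab rejects this unless force pushes
# are allowed on the protected main branch
git push --force origin main
```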
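As an extra check, tree object IDs are content hashes, so two commits with the same tree ID contain exactly the same paths, modes, and file contents, regardless of their history. A minimal sketch with hypothetical helper names, assuming the `archive` remote from the backup step still holds the old `main`:

```bash
#!/usr/bin/env bash
# Succeed if the two revisions have identical trees (identical file contents).
same_tree() {
  [[ "$(git rev-parse "$1^{tree}")" == "$(git rev-parse "$2^{tree}")" ]]
}

# Print the number of root (parentless) commits reachable from <rev>;
# a freshly truncated history should report exactly 1.
root_commit_count() {
  git rev-list --max-parents=0 "$1" | wc -l | tr -d ' '
}

# Usage:
#   same_tree archive/main origin/main && echo "contents identical"
#   root_commit_count origin/main
```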

Migrate Open Merge Requests

If there were open merge requests in the repository, we’ll need to migrate them. We’ll need our new commit hash from the truncation step, and then we’ll just need to cherry-pick each commit onto the new base commit. Sounds easy, right?

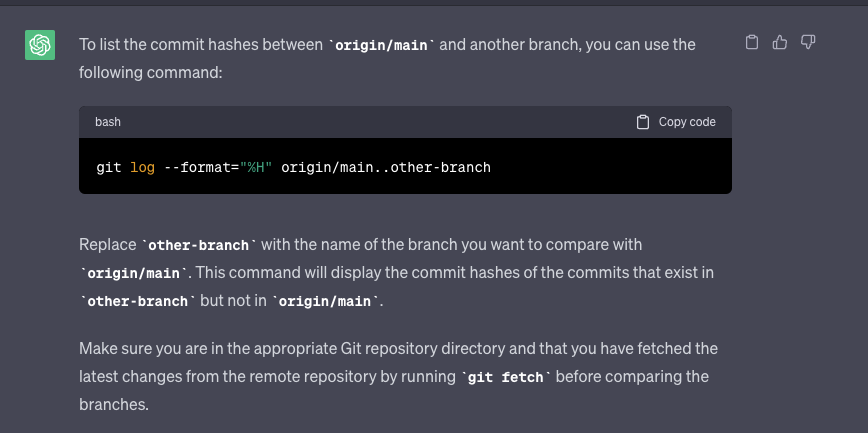

However, what if there are many commits and many merge requests? We don’t want to do this by hand. There is likely a git command we can use to write a small script. Let’s do some research and see what we find:

Great, exactly what we wanted!

As always, you should independently verify that AI-generated responses are accurate and reasonable.

In this case, we can easily run this command and confirm that it lists the commits that are on our targeted branch but not on origin/main.

Putting everything together:

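```bash
#!/usr/bin/env bash
set -euo pipefail

# Replace with your targeted branch name
target_branch="my-branch"

# Replace with your new commit hash from "Truncate Git History"
init_commit=cafebeef

# origin/main now holds the rewritten history, so compare against the old
# main in the archive remote from "Backup Git History"
git fetch origin
git fetch archive

# Prepare a staging branch
git checkout -b "$target_branch-2" "$init_commit"

# Cherry-pick the branch's commits, oldest first (git log lists newest first)
for commit in $(git log --reverse --no-merges --format="%H" archive/main..origin/"$target_branch"); do
  if ! git cherry-pick "$commit"; then
    # For conflicts with LFS binaries, defer to $init_commit and fix them after
    # cherry-picking; any other unresolved conflict stops the script here.
    # GIT_EDITOR=true keeps the original commit message without opening an editor.
    git checkout "$init_commit" -- build
    GIT_EDITOR=true git cherry-pick --continue
  fi
done

# Fix the remote branch
git push --force --set-upstream origin "$(git branch --show-current)":"$target_branch"
```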
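Because `git log` lists commits newest first while cherry-picks must be replayed oldest first, it is worth double-checking the order before replaying a branch. A minimal sketch; `commits_to_replay` is a hypothetical helper, and `base` should be a ref from the old history (such as `archive/main` from the backup step), since the rewritten `origin/main` no longer shares history with old branches:

```bash
#!/usr/bin/env bash
# Print the non-merge commits on <branch> that are not on <base>,
# oldest first, which is the order they should be cherry-picked in.
commits_to_replay() {
  local base="$1" branch="$2"
  git log --reverse --no-merges --format='%H' "$base..$branch"
}

# Usage:
#   commits_to_replay archive/main origin/my-branch
```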

Clean Git Objects

Now that we’ve truncated the git history and migrated all open feature branches, we’ll need to clean up:

- Any remaining references to old git objects, so that garbage collection can remove those objects on the remote. These references are:

  - Branches

  - Tags

  - Other references kept by GitLab

- Any references to this repository that depend on a commit hash or a tag

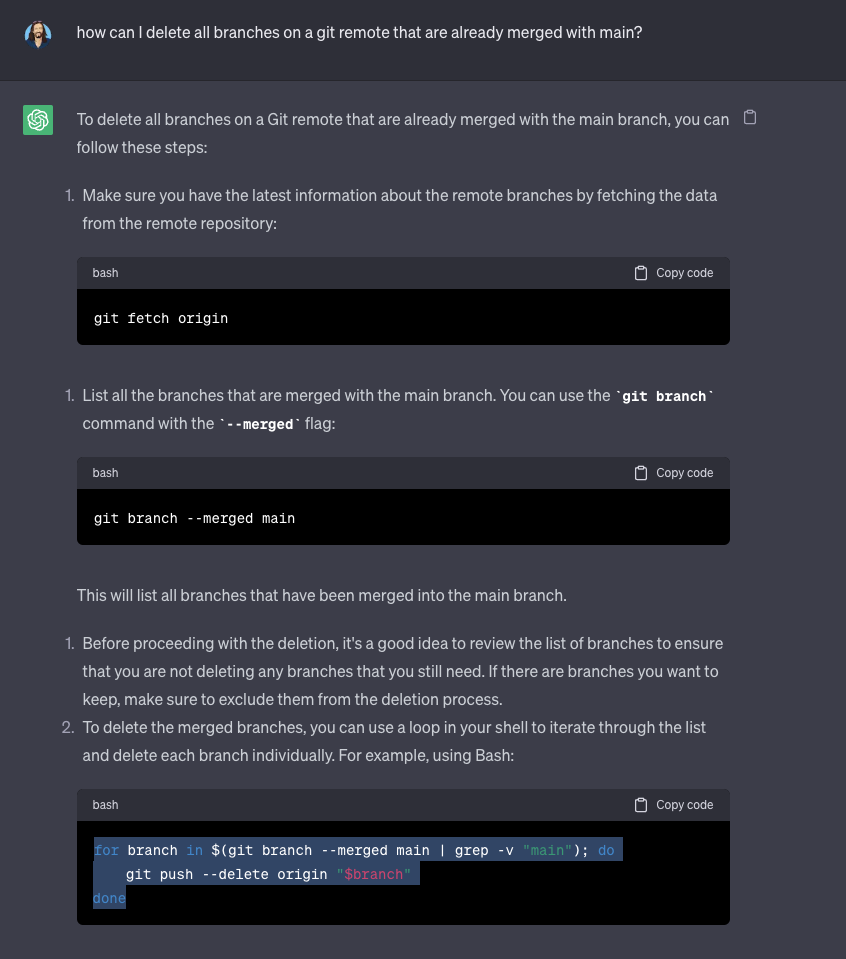

First, let’s delete all branches that are merged into main (remember that we already migrated the unmerged branches in Migrate Open Merge Requests). This seems like something relatively easy to do natively with git:

This seems like what we want!

Verifying with a dry-run:

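```bash
# origin/main now holds the rewritten history, so use the archived main to
# decide which remote branches were merged; exact-match main and HEAD only
git fetch --prune origin
git fetch archive
for branch in $(git for-each-ref --merged archive/main --format='%(refname:lstrip=3)' refs/remotes/origin | grep -vx -e main -e HEAD); do
  echo "$branch"
done
```

This looks well-formed, so let’s go ahead and run it against our remote. This may take a while if you are not regularly deleting merged branches:

```bash
for branch in $(git for-each-ref --merged archive/main --format='%(refname:lstrip=3)' refs/remotes/origin | grep -vx -e main -e HEAD); do
  git push --delete origin "$branch"
done
```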

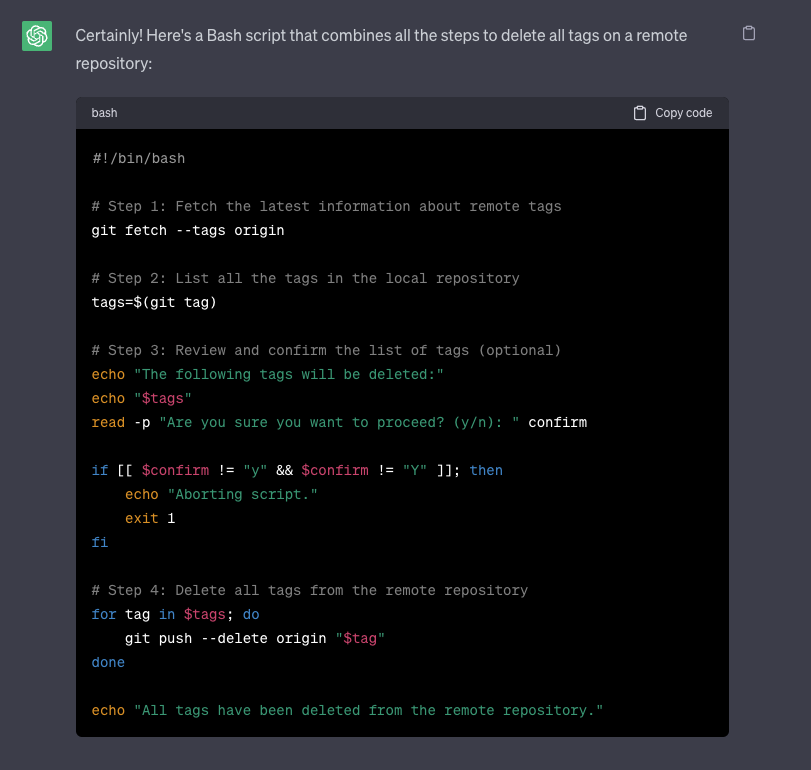

Once branches are deleted, we can delete all tags.

This script is almost what we want. Let’s review it and ensure it meets our goals.

Let’s fix the shebang to play nicely with bash installed from Homebrew; otherwise, this script seems well-formed and reasonably safe, given that our goal is to delete all tags:

```bash
#!/usr/bin/env bash

# Step 1: Fetch the latest information about remote tags
git fetch --tags origin

# Step 2: List all the tags in the local repository
tags=$(git tag)

# Step 3: Review and confirm the list of tags (optional)
echo "The following tags will be deleted:"
echo "$tags"
read -r -p "Are you sure you want to proceed? (y/n): " confirm
if [[ $confirm != "y" && $confirm != "Y" ]]; then
  echo "Aborting script."
  exit 1
fi

# Step 4: Delete all tags from the remote repository
for tag in $tags; do
  git push --delete origin "$tag"
done

echo "All tags have been deleted from the remote repository."
```
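Once the script finishes, you can confirm from any clone that the remote really has no tags left. A minimal sketch; `remaining_remote_tags` is a hypothetical helper built on `git ls-remote`:

```bash
#!/usr/bin/env bash
# Print the tag names that still exist on <remote>; prints nothing once
# every tag has been deleted. --refs drops the peeled "^{}" entries.
remaining_remote_tags() {
  git ls-remote --tags --refs "$1" | sed 's#.*refs/tags/##'
}

# Usage:
#   [ -z "$(remaining_remote_tags origin)" ] && echo "no tags left on origin"
```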

Now that we’ve cleaned up native git references to orphaned objects, we’ll need to clean up references that are specific to GitLab. For this process, I recommend GitLab’s “Reducing repository size” documentation. Notably, we’ve already pruned branches, tags, and other native git references, and only need to worry about GitLab-specific references:

- `refs/merge-requests/*`

- `refs/pipelines/*`

- `refs/environments/*`

- `refs/keep-around/*`

Finally, if you have any other software that is using this repository and referring to it by commit hash, you’ll need to update this software to reference the new commit hash.
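Because truncation changes every commit hash but not the file contents, one way to find the replacement for a pinned hash is to look for the commit in the new history with the same tree. A minimal sketch, assuming the old commit is still available locally (for example, fetched from the `archive` remote); `find_equivalent_commit` is a hypothetical helper. Pins older than the migration commit will have no match, since that history now lives only in the archive.

```bash
#!/usr/bin/env bash
# Print the newest commit reachable from <new_ref> whose tree matches the tree
# of <old_commit>; prints nothing if no commit has identical contents.
find_equivalent_commit() {
  local old_commit="$1" new_ref="$2"
  local old_tree
  old_tree=$(git rev-parse --verify "$old_commit^{tree}") || return 1
  git log --format='%H %T' "$new_ref" |
    awk -v tree="$old_tree" '$2 == tree { print $1; exit }'
}

# Usage:
#   find_equivalent_commit <old-pinned-hash> origin/main
```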

In some cases, you may need to set the environment variable GIT_LFS_SKIP_SMUDGE=1 so that LFS files are not downloaded (package or dependency managers that rely on git under the hood are especially susceptible to this).
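For tools that shell out to git, the variable has to be present in the environment the tool runs with, since git and Git LFS read it from there. A minimal sketch; `with_lfs_skipped` is a hypothetical wrapper, and the clone URL is the example one used in this post:

```bash
#!/usr/bin/env bash
# Run any command with Git LFS smudging disabled, so clones and checkouts
# leave pointer files in place instead of downloading LFS content.
with_lfs_skipped() {
  GIT_LFS_SKIP_SMUDGE=1 "$@"
}

# Usage:
#   with_lfs_skipped git clone git@gitlab.com:my-org/my-repo.git
#   cd my-repo && git lfs pull --include "build/**"   # fetch LFS content later, if needed
```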

Re-clone the Repository

After the process is completed, your team will need to delete their copies of the repository and re-clone:

```bash
rm -rf my-repo
git clone git@gitlab.com:my-org/my-repo.git
```
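To see how much the re-clone actually saves, you can compare the packed object size of an old clone with that of a fresh one. A minimal sketch using `git count-objects -vH`; `pack_size` is a hypothetical helper. LFS content is stored separately under .git/lfs, so it is not included in this number.

```bash
#!/usr/bin/env bash
# Print the "size-pack" line from git count-objects for the repository
# at the given path (default: the current directory).
pack_size() {
  git -C "${1:-.}" count-objects -vH | grep '^size-pack:'
}

# Usage:
#   pack_size old-clone/my-repo
#   pack_size my-repo
```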

Conclusion

Back to our blissful slumber

While lengthy, this process should ultimately succeed in reducing the size of the affected repository. If your repository was large, the result is likely to be a noticeable performance improvement, which makes the migration worthwhile. Along the way, you are likely to learn a great deal about how git works under the hood, as well as about GitLab.

However, this entire process could have been avoided if Git LFS had been used to store large objects in the first place. This reinforces the importance of understanding the long-term implications of design choices, even when they seem insignificant at the outset.

If you are interested in growing and challenging your skillset, we have several positions available right now and would be happy to chat.