Google Cloud Functions: What Could Have Been

By Drew Rothstein, President

Back in early 2017, Google Cloud Functions launched in Beta, about 3 years after AWS Lambda launched.

There were a lot of reasons that folks pursued these managed services on Public Cloud platforms: no server management, no container builds or orchestration, and some level of (to be learned: problematic) scaling capabilities… just to name a few of the marketing datapoints.

Today these platforms are mature, with a wide range of platform support, observability support, and reasonable use cases.

Sadly, as we learned at Unit 410, continuing to build on and operate Google Cloud Functions became an increasing burden on our Infrastructure Team. This post discusses some of the reasons we chose to migrate these particular services to Google Cloud Run. The lessons learned and examples may also apply broadly to AWS Lambda or likely any other FaaS (Function-as-a-Service) offering.

Code, Cloud, Now

Utilizing a FaaS offering, such as Cloud Functions, enables me as an Engineer to write a service quickly and easily, and get it deployed in record time. There is a larger discussion about why a FaaS enables this over a “standard” development workflow, but that is a different topic altogether and would require more length than the Internet reasonably allows.

Example Cloud Function deployment to get us on-topic:

index.ts:

    import {HttpFunction} from '@google-cloud/functions-framework/build/src/functions';

    export const helloWorld: HttpFunction = (req, res) => {
      res.send("Hello!");
    };

tsconfig.json:

    {
      "compilerOptions": {
        "target": "es6",
        "module": "commonjs"
      },
      "include": [
        "*.ts"
      ]
    }

package.json:

    {
      "name": "super-cool-function",
      "version": "0.0.1",
      "description": "Super Cool Function",
      "main": "index.js",
      "scripts": {
        "build": "tsc",
        "start": "functions-framework --target=helloWorld",
        "deploy": "gcloud functions deploy helloWorld --runtime nodejs18 --trigger-http --allow-unauthenticated"
      },
      "dependencies": {
        "@google-cloud/functions-framework": "^3"
      },
      "devDependencies": {
        "typescript": "^5",
        "@types/node": "^20"
      }
    }

That is pretty much it, and you have a working / running service.

All you need is an npm run build && npm run deploy, and you are on your way to the next artisanal coffee shop where you can comfortably say that it is built and deployed while everyone gawks in awe.

Due to this ease-in-onboarding / time-to-launch, we at Unit 410 would commonly prototype small utility services that fall into a couple of categories:

- Query an API and store data

- Query an API and write some monitoring data / output somewhere

- Generate a report from stored data

- Serve up an API in real-time, querying various backends

- Serve up a small App that interacts with a Ledger / Web3 backend

Most of these are small applications, typically less than 1k LoC. By the time you implement packaging (Docker, Bazel, etc.), add a CI/CD configuration, create an encrypted secrets bundle, write a Makefile, etc., the actual LoC is exceeded by Infrastructure components. This is insanity. Even with templated repositories, monorepo or otherwise - there is still a necessary amount of configuration infrastructure to get a service out-the-door in a way that you will want to own and maintain if this thing lives for the next 5-7yrs (which it almost always will). If you chart any new territory – such as trying to auth. to an IAP-protected service, deal with strange rate limits, etc. – then you will most likely exceed the LoC with more Infrastructure configuration and codification.

Functions and You

Commonly, folks would choose a Cloud Function as the deployment as it fits a variety of use cases quite well. Have some TypeScript? No problem. Need to stream a file from GCS? Sure thing. Need to IAM authenticate to some random backend we are hosting? You got it. Would you like to have a local environment where you can prototype before deployment? Totally cool. Don’t want to put together any Packaging? Don’t, just pass --source with an appropriate .gcloudignore.

It just made sense. And over time, 10s of these types of utility and small services would be stood up. The years would tick by, and they would generally run happy as a clam.

As we grew, these services grew organically, various templated repositories came into existence (e.g., standard modules in our Terraform), and the services became very easy and relatively safe to work with. Each additional Infrastructure component became a bolt-on without much hassle.

Example Function + Scheduler Terraform:

module.tf:

    variable "network" {
      type = string
    }

    variable "prefix" {
      type = string
    }

    variable "request_path" {
      type = string
    }

    variable "iap_proxy_project" {
      type = string
    }

    variable "backend_service_name" {
      type = string
    }

    data "google_compute_default_service_account" "default" {}

    resource "google_cloudfunctions_function" "this" {
      name                = "${var.prefix}-${var.network}"
      runtime             = "nodejs18"
      available_memory_mb = 1024
      trigger_http        = true
      timeout             = 360
      entry_point         = "main"
      max_instances       = 3

      labels = {
        deployment-tool = "cli-gcloud"
      }

      lifecycle {
        ignore_changes = [
          environment_variables,
          ...
        ]
      }
    }

    resource "google_cloud_scheduler_job" "this" {
      name             = "${var.prefix}-${var.network}"
      region           = "us-central1"
      schedule         = "1 * * * *"
      time_zone        = "Europe/London"
      attempt_deadline = "1800s"

      http_target {
        http_method = "GET"

        headers = {
          Content-Type = "application/json"
        }

        uri = "${google_cloudfunctions_function.this.https_trigger_url}/${var.request_path}"

        oidc_token {
          audience              = google_cloudfunctions_function.this.https_trigger_url
          service_account_email = data.google_compute_default_service_account.default.email
        }
      }

      retry_config {
        max_backoff_duration = "3600s"
        max_doublings        = 5
        max_retry_duration   = "0s"
        min_backoff_duration = "5s"
        retry_count          = 0
      }
    }

    resource "google_iap_web_backend_service_iam_member" "member" {
      project             = var.iap_proxy_project
      web_backend_service = var.backend_service_name
      role                = "roles/iap.httpsResourceAccessor"
      member              = "serviceAccount:${data.google_compute_default_service_account.default.email}"
    }

main.tf:

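    module "testnet_function" {
      source               = "./modules/module"
      network              = "testnet"
      iap_proxy_project    = "foo-bar-nodes"
      backend_service_name = "foo-bar-rpc-lb-iap-backend-service"

      # prefix and request_path have no defaults in the module above,
      # so a real call must also set them.
    }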

As this module and others evolved, it still was as simple as adding ~5 lines of Terraform. And, voilà, you were done on the Infrastructure side.

As an Engineer trying to do secure and novel things, getting the Infrastructure component of how a thing is packaged and deployed out of the way was critical to move quickly. As our tooling continued to evolve, we also had standard Observability tools that were automatically integrated, plus a variety of safety nets exposed should anything fall through the cracks.

We All Hit Limits

Some of our services chugged along over time just fine. A small WebApp that performs some internal utility? All okay. A small service that makes one query per day to automate a previously manual process? Hunky dory. But some of these services were not architected (see next section) in a way that fit the deployed environment anymore. For example: hit the CPU or Memory limit? Need substantial disk space to process something? Need to use a library with some interesting system-level requirements?

Many times, these types of limitations, while known, wouldn’t be obvious when first putting something together to solve a problem. Each of these could be overcome in a variety of ways, but the time, attention required, and, more critically, the lack of standardization in how to solve it, would all rear their heads again and again.

As an example, if you hit the limit of 8GB of RAM and needed more (like 16GB), you could solve this by migrating to a “2nd gen” Cloud Function. From reading the documentation, this would start off pretty simple. You would probably try a deployment with a newly staged Terraform module, a development instance of your service, and some debugging flags to keep an eye on what is happening. But you would hit your first issue pretty quickly: it looks like Google swapped the registry from Container Registry to Artifact Registry. You would then start reading the documentation for Artifact Registry and realize you opened a can of worms so large that a barrel of monkeys looks small in comparison. Migrating to Artifact Registry would probably have been simpler if you didn’t have Terraform and a variety of automation that integrated your Image Registry with Vulnerability Management tooling, archiving / backups of images, monitoring for SHA-comparison, and alerting on image changes, to name a few.

A simple bump of memory has turned into a side project that will likely require more Infrastructure work than writing the past X Cloud Functions combined. This was a simple memory increase, but what about needing more disk space or system-level requirement changes? Sadly, it is a similar story with no clear path from either Google Cloud or Amazon Web Services. This is why Infrastructure Engineers exist: to help solve these issues. But shouldn’t you have been offered this information up-front? Yes.
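One way to surface this kind of limit earlier is a small pre-flight check over the memory each function requests. The sketch below is illustrative only: `FunctionSpec`, `exceeds_gen1_memory`, and `functions_needing_migration` are hypothetical names, the sample functions are made up, and the only limit it encodes is the 8GB Gen1 ceiling described above.

```python
from dataclasses import dataclass
from typing import Iterable, List

# The 8GB Gen1 memory ceiling mentioned in the text, expressed in MB.
GEN1_MAX_MEMORY_MB = 8 * 1024


@dataclass(frozen=True)
class FunctionSpec:
    """Hypothetical description of a function we intend to deploy."""
    name: str
    memory_mb: int


def exceeds_gen1_memory(spec: FunctionSpec, limit_mb: int = GEN1_MAX_MEMORY_MB) -> bool:
    """Return True when the requested memory is above the Gen1 ceiling."""
    return spec.memory_mb > limit_mb


def functions_needing_migration(specs: Iterable[FunctionSpec]) -> List[str]:
    """Names of functions that cannot stay on Gen1 with their requested memory."""
    return [spec.name for spec in specs if exceeds_gen1_memory(spec)]


if __name__ == "__main__":
    # Made-up examples: one small utility and one that needs 16GB.
    specs = [
        FunctionSpec(name="report-generator", memory_mb=1024),
        FunctionSpec(name="ledger-indexer", memory_mb=16 * 1024),
    ]
    print(functions_needing_migration(specs))
```

A check like this does not remove the migration work, but it moves the surprise from deployment time to review time.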

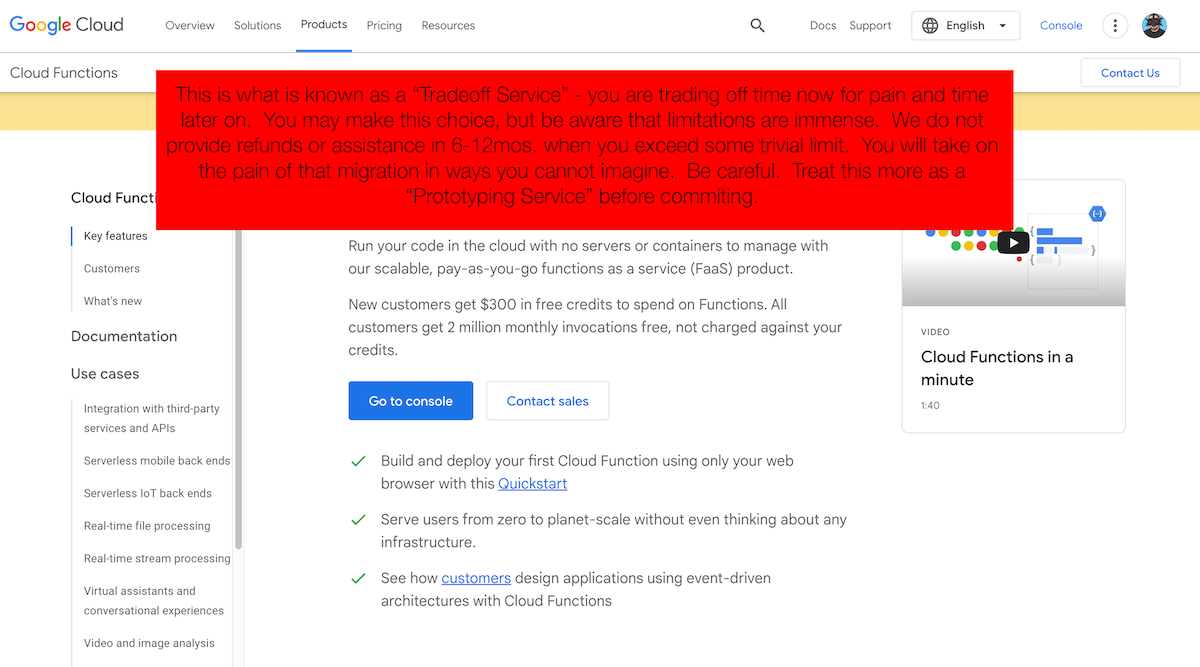

Tradeoffs Unbeknownst

The Product Managers for FaaS products should be empowered to have giant red warning banners at the top of all documentation for these products.

I would propose the following:

This would be more real. And, it would prevent a lot of pain for everyone involved when the basic evolution of your software evolves.

What is most confusing, and not clearly articulated (or perhaps not well understood) by any Public Cloud provider, is that your Infrastructure choices are all about tradeoffs. In the case of FaaS, you are getting out the door quickly by bypassing most of the Infrastructure complexity, planning, and discussion for now. This may be perfectly acceptable for your service(s) depending on their purpose, timeline, etc., but the lack of clear communication about it is abysmal.

Really, GCP?

The final straw that broke the camel’s back for us using Cloud Functions was pretty simple: this entire situation caused us much pain and suffering. This came at us in unexpected ways and at unexpected times.

We hit all the common issues summarized above:

- We exceeded Memory and CPU limits on Gen1 functions.

- We needed more disk space.

- We required more low-level system dependencies that required more complete packaging.

- Etc.

The unforced errors, however, were the ones that caught our attention and triggered a change of course before there was a real, serious problem. The first was build-system related.

In early 2023, Google’s product broke several of our builds with no advance notice (e.g., email or Account Manager); we only found this notification later. They often happily send us notification emails that are useless, but they didn’t seek to notify us about this change.

The second was also build-system related. After a variety of back/forth with Support and Account Managers (no one was able to actually explain it), the problem simply vanished. This was related to underlying libraries that we don’t control in their build system that resulted in traces such as:

      File "/layers/google.python.pip/pip/lib/python3.8/site-packages/werkzeug/_reloader.py", line 315, in __init__
        from watchdog.events import EVENT_TYPE_OPENED
    ImportError: cannot import name 'EVENT_TYPE_OPENED' from 'watchdog.events' (/layers/google.python.pip/pip/lib/python3.8/site-packages/watchdog/events.py)

The last for us was when we first experienced the migration from a Gen1 to Gen2 Cloud Function. We updated all of our Terraform, updated the flags, and fixed a couple of obvious problems that could now be optimized thanks to the additional system resources available.

A Gen2 Cloud Function is actually a Cloud Run service. This is fairly confusing - not for us humans (after you grok it), but for tooling and automation that had been built over several years. You still interact with the service as a Cloud Function, but it deploys a Cloud Run service on the backend that is actually running slightly differently and requires different IAM, different monitoring, etc.

It’d be interesting to understand this design choice and why this path was chosen vs. a more segregated continued offering. Maybe it was a subtle plan to get us to do what we actually did? Maybe it was tested and found to be the most reasonable path for most customers? I am truly not sure. But I can’t imagine that any service provider writing tooling and investing in their Infrastructure appreciated this particular path; they would likely have preferred something much different.

Path Forward

After the aforementioned experience, we set sail for the future: Cloud Run Landia. The journey looked both beautiful and painful, but we knew the time had come to tackle our fears and launch into this no-longer-avoidable work.

By migrating to Cloud Run, we would have to solve (for good reason) a few problems:

- Own our packaging in all respects. A detailed list of .gitignore, .gcloudignore, .dockerignore, and cloudbuild.yaml, to name a few of our now explicit configurations.

- Own our build environment. We wouldn’t rely on passing in source code to Google to let it build however it felt that day.

- Own our deployment environment. We wouldn’t rely on various invisible tunables that Google kindly offered and instead would configure our various settings for scaling and usage.

This was a pretty quick burn-down for us. It was completed in just a few cycles / sprints. We would take a bite out of a service and also take on the effort to upgrade the runtime, the dependencies, and in some cases simply re-write the tool! Again, these are relatively small services, and, when a service does its job, you may not need to touch it for 2, 3, or even 4 years except for dependency updates.

Most of these migrations were small and required only a short list of changes. But some were much larger, depending on what we were willing to bring into scope.

An example of a small migration:

main.py:

    - def main(request):
    + def main():

README.md:

    - ## Function
    + ## Service
    - This operates as a Cloud Function that is triggered from a Cloud Schedule.
    + This operates as a Cloud Run service that is triggered from a Cloud Schedule.

Makefile:

    - deploy: ensure-environment envvars-exist lint deploy-function record-deploy
    + deploy: ensure-environment envvars-exist lint deploy-service record-deploy

Dockerfile:

    + FROM python:3.10-slim
    +
    + ENV PYTHONUNBUFFERED True
    +
    + ENV APP_HOME /app
    + WORKDIR $APP_HOME
    + COPY . ./
    +
    + RUN pip install --no-cache-dir -r requirements.txt
    +
    + CMD exec gunicorn --bind :$PORT --workers 1 --threads 8 --timeout 0 main:app
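The Dockerfile's `main:app` target means gunicorn expects a WSGI callable named `app` inside main.py, which the diff above does not show. A minimal sketch of what that could look like follows, assuming nothing about the original code: the real service most likely used a web framework, while this version uses a bare WSGI callable to stay dependency-free, and the body of `main()` is a hypothetical stand-in for the job's actual work.

```python
import json


def main() -> dict:
    """Hypothetical stand-in for the job's real work (no request argument anymore)."""
    return {"status": "ok"}


def app(environ, start_response):
    """Bare WSGI callable so that `gunicorn main:app` has something to serve.

    Every incoming request (for example, from the scheduler) runs main() once
    and reports the outcome as JSON.
    """
    try:
        payload = main()
        status = "200 OK"
    except Exception as exc:  # report the failure to the caller instead of hanging
        payload = {"error": str(exc)}
        status = "500 Internal Server Error"

    body = json.dumps(payload).encode("utf-8")
    headers = [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ]
    start_response(status, headers)
    return [body]
```

Returning a 500 on failure matters here because the scheduler only sees the HTTP status; a job that fails silently with a 200 would look healthy from the outside.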

Conclusion

As we neared completion, we would run the following to take a look at what remained each day and confirm we really did complete everything. As we have previously posted, we utilize Continuous Deployment (CD) for a lot of our services on a self-hosted platform. Auditing became important to make sure that all of our triggers in CD were working as expected.

Auditing script:

    PROJECTS=$(gcloud projects list --format="value(projectId)" --quiet)

    # Iterate through each project
    for PROJECT in $PROJECTS; do
      # List all Cloud Functions in the current project, using the --project flag
      # Redirect standard error to /dev/null to hide errors
      FUNCTIONS=$(gcloud functions list --project "$PROJECT" --format="table[box](name, status, trigger)" --quiet 2>/dev/null)

      # Check if the output is non-empty
      if [ -n "$FUNCTIONS" ]; then
        echo "Cloud Functions in project: $PROJECT"
        echo "$FUNCTIONS"
      fi
    done
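If the boxed table is awkward to diff from day to day, the same audit can be reduced to a per-project summary. The sketch below is not what we ran; it assumes the listing is captured with `--format=json` instead of the table format, and that each entry carries its full resource name (`projects/<project>/locations/<region>/functions/<name>`) in a `name` field. `remaining_functions` and the sample data are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List


def remaining_functions(gcloud_json: str) -> Dict[str, List[str]]:
    """Group function names by project from a JSON listing.

    Assumes each entry has a `name` of the form
    projects/<project>/locations/<region>/functions/<name>.
    """
    by_project: Dict[str, List[str]] = defaultdict(list)
    for entry in json.loads(gcloud_json):
        parts = entry["name"].split("/")
        project, region, short_name = parts[1], parts[3], parts[5]
        by_project[project].append(f"{region}/{short_name}")
    # Sort for stable output so day-over-day comparisons are easy.
    return {project: sorted(names) for project, names in sorted(by_project.items())}


if __name__ == "__main__":
    # Made-up listing with two functions left in one project.
    sample = json.dumps([
        {"name": "projects/foo-bar-nodes/locations/us-central1/functions/report"},
        {"name": "projects/foo-bar-nodes/locations/us-central1/functions/monitor"},
    ])
    for project, names in remaining_functions(sample).items():
        print(f"{project}: {len(names)} remaining -> {', '.join(names)}")
```

An empty result is the signal that the migration is complete.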

Our journey to Cloud Run may not make sense for all cases and all teams. But we thought that by sharing some of the pitfalls of FaaS platforms and, more specifically, a way out of them (and that it is possible!), we could help others to head down this path as appropriate.

With Cloud Run (and similarly, AWS Fargate), there are still various “fun” issues. As with the tradeoffs above, maybe there should be a similar disclaimer for these services.

Building great internal tools, libraries, and Infrastructure components is what makes software engineering challenging in the modern age of Public Cloud. Having the access and ability to nearly instantaneously come up with an idea, write a service, and deploy it before your 3rd coffee is pretty amazing - no capacity planning needed, just a credit card.

If migrations like this and building the best technology to advance secure and novel crypto participation are interesting to you, reach out to us here.